BACHELOR Assignment

[M] Understanding Sparse Neural Networks

Type: Bachelor BIT, CS, EE

Location: Internal

Period: TBD

Student: (Unassigned)

If you are interested, please contact:

Description:

Context of the work: Deep Learning (DL) is one of the most important areas of machine learning today, and it has proven successful in all three main machine learning paradigms (supervised, unsupervised, and reinforcement learning). Still, we currently lack a fundamental understanding of the behavior of neural networks.


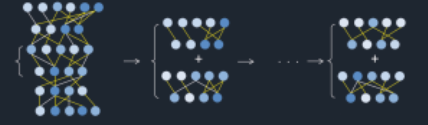

Short description of the assignment: The main goal of this project is to understand the relation between weight magnitude, activation functions, and data normalization techniques in sparse neural networks. In particular, the aim is to determine how different activation functions affect the importance of individual weights. One possible way to quantify the importance of a weight is its effect on the model's final accuracy, for example the drop in accuracy when that weight is removed.
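A minimal sketch of one way to measure weight importance by ablation, assuming a tiny bias-free dense network stored as nested Python lists: each remaining (non-zero) weight is set to zero in turn, and its importance is the resulting drop in accuracy. The network, the toy dataset, and the names `forward`, `accuracy`, and `ablation_importance` are hypothetical illustrations, not part of the assignment; a real study would use a deep learning framework and trained models.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical representation: layers[k][i][j] is the weight from input unit j
# to output unit i of layer k. Zero entries are treated as pruned connections.
Layer = List[List[float]]
Sample = Tuple[Sequence[float], int]


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "relu": relu,
    "tanh": math.tanh,
    "sigmoid": sigmoid,
}


def forward(layers: Sequence[Layer], x: Sequence[float],
            act: Callable[[float], float]) -> List[float]:
    """Bias-free dense forward pass; `act` is applied to hidden layers only."""
    h = list(x)
    for k, w in enumerate(layers):
        h = [sum(w_ij * h_j for w_ij, h_j in zip(row, h)) for row in w]
        if k < len(layers) - 1:  # the output layer stays linear
            h = [act(v) for v in h]
    return h


def accuracy(layers: Sequence[Layer], data: Sequence[Sample],
             act: Callable[[float], float]) -> float:
    """Fraction of samples whose arg-max output equals the label."""
    correct = 0
    for x, label in data:
        out = forward(layers, x, act)
        predicted = max(range(len(out)), key=out.__getitem__)  # ties -> lowest index
        correct += int(predicted == label)
    return correct / len(data)


def ablation_importance(layers: Sequence[Layer], data: Sequence[Sample],
                        act: Callable[[float], float]) -> Dict[Tuple[int, int, int], float]:
    """Importance of each non-zero weight = accuracy drop when only it is zeroed."""
    baseline = accuracy(layers, data, act)
    scores: Dict[Tuple[int, int, int], float] = {}
    for k, w in enumerate(layers):
        for i, row in enumerate(w):
            for j, value in enumerate(row):
                if value == 0.0:  # already absent in the sparse network
                    continue
                row[j] = 0.0
                scores[(k, i, j)] = baseline - accuracy(layers, data, act)
                row[j] = value  # restore the original weight
    return scores


# Hypothetical toy sparse network (2 inputs -> 2 hidden -> 2 classes) and data.
net = [
    [[1.0, 0.0], [0.0, 1.0]],    # hidden layer: two of four weights pruned
    [[1.0, -1.0], [-1.0, 1.0]],  # output layer
]
data = [([2.0, 0.5], 0), ([0.5, 2.0], 1), ([1.0, -1.0], 0), ([-1.0, 1.0], 1)]

for name in ("relu", "tanh"):
    print(name, ablation_importance(net, data, ACTIVATIONS[name]))
```

In this toy setup every remaining weight has magnitude 1.0, and both activations reach full accuracy before ablation, yet zeroing the hidden weight at index `(0, 1, 1)` costs 0.5 accuracy under ReLU but only 0.25 under tanh. This is the kind of effect the project asks about: identical magnitudes, different importance depending on the activation function.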

Phase 1 – explore various activation functions and neural network models (e.g., MLP, CNN, LSTM), and perform ablation studies to measure how specific weights affect the final accuracy.

Phase 2 – design a final systematic evaluation framework and perform experiments.

Phase 3 – extract general concepts/rules, if any.

Phase 4 – prepare the project output: code and report.
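When analyzing the ablation studies of Phases 1 and 2, one option for summarizing the relation between weight magnitude and weight importance is a rank correlation. The sketch below assumes Spearman's rank correlation (an illustrative choice, not prescribed by the assignment) computed between |w| and a per-weight importance score such as the accuracy drop. The function names and the input numbers are hypothetical.

```python
import math
from typing import Dict, Hashable, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for idx in order[start:end + 1]:
            ranks[idx] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return float("nan")  # undefined when one side is constant
    return cov / math.sqrt(var_a * var_b)


def magnitude_importance_spearman(weights: Dict[Hashable, float],
                                  importance: Dict[Hashable, float]) -> float:
    """Spearman correlation = Pearson correlation of the (tie-averaged) ranks."""
    keys = [k for k in weights if k in importance]
    magnitudes = [abs(weights[k]) for k in keys]
    scores = [importance[k] for k in keys]
    return pearson(average_ranks(magnitudes), average_ranks(scores))


# Hypothetical inputs: weight values and their measured accuracy drops.
weights = {"w1": 0.1, "w2": -0.5, "w3": 0.9, "w4": -1.2}
drops = {"w1": 0.0, "w2": 0.1, "w3": 0.05, "w4": 0.4}
print(magnitude_importance_spearman(weights, drops))
```

A value near 1 would suggest that magnitude is a good proxy for importance in that setting; comparing the value across activation functions and normalization schemes is one way to look for the general rules targeted in Phase 3. Ranks rather than raw values are used because accuracy drops are often coarse and non-linear in |w|.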

Possible outcomes: novel insights into neural networks, algorithmic novelty, open-source code, and publishable results.

Requirements

- Basic calculus, probability theory, and optimization

- Very good programming skills

- Basic understanding of artificial neural networks

- Analytical skills

Learning Objectives - Upon successful completion of this project, the student will be able to:

- Understand the fundamental concepts behind neural networks

- Work with neural networks in practice and create an open-source software product

References:

[1] D.C. Mocanu, E. Mocanu, P. Stone, P.H. Nguyen, M. Gibescu, A. Liotta, “Scalable Training of Artificial Neural Networks with Adaptive Sparse Connectivity inspired by Network Science”, Nature Communications, 2018, https://www.nature.com/articles/s41467-018-04316-3; code: https://github.com/dcmocanu/sparse-evolutionary-artificial-neural-networks

[2] A. Gaier, D. Ha, “Weight Agnostic Neural Networks”, NeurIPS, 2019, https://arxiv.org/abs/1906.04358

[3] U. Evci, T. Gale, J. Menick, P.S. Castro, E. Elsen, “Rigging the Lottery: Making All Tickets Winners”, arXiv, 2019, https://arxiv.org/abs/1911.11134