BACHELOR Assignment

[M] Continual Learning

Type: Bachelor BIT, CS, EE

Location: Internal

Period: TBD

Student: (Unassigned)

If you are interested, please contact:

Description:

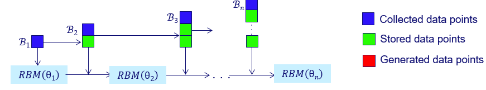

Context of the work: Continual learning [6] is a machine learning paradigm in which an agent must adapt continuously to a changing environment. It has the potential to push Artificial Intelligence well beyond its current boundaries, but so far only modest steps have been made in this direction. One typical challenge is catastrophic forgetting, where learning new tasks degrades performance on previously learned ones. It is usually addressed with experience replay [1], but this method is not memory-free because it has to store past experiences.
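To make the memory cost of experience replay concrete, here is a minimal sketch of a uniform-sampling replay buffer. It is an illustration only, not the exact procedure of [1]; the class name `ReplayBuffer`, the capacity, and the dummy transitions are assumptions made for this example.

```python
import random
from collections import deque


class ReplayBuffer:
    """Hypothetical fixed-capacity store of past transitions."""

    def __init__(self, capacity, seed=0):
        # A bounded deque drops the oldest transition once capacity is reached.
        self.storage = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement from the stored transitions.
        k = min(batch_size, len(self.storage))
        return self.rng.sample(list(self.storage), k)

    def __len__(self):
        return len(self.storage)


# Dummy interaction loop: states are just integers here (an assumption).
buffer = ReplayBuffer(capacity=1000)
for t in range(1500):
    buffer.add(state=t, action=0, reward=1.0, next_state=t + 1, done=False)

batch = buffer.sample(32)
```

The buffer has to keep every transition it may later replay, so its memory footprint grows with the chosen capacity. This is the cost that a memory-free method would avoid.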

Short description of the possible assignments:

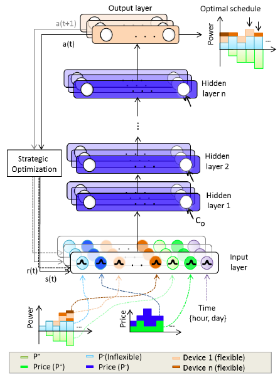

- Use the most straightforward generative replay method [3,4] to replace experience replay in deep reinforcement learning [2], e.g. [5]. The choice of generative model will follow from your literature survey.

- Find the most suitable generative model to perform generative replay in the deep reinforcement learning context.

- Use generative replay for continual learning in the context of supervised/unsupervised learning [4].

- Define your own topic.
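The idea shared by the assignments above, replacing stored experiences with samples drawn from a generative model, can be sketched as follows. This is a toy illustration under strong assumptions: a running diagonal Gaussian stands in for the generative model, which is far simpler than the models used in [3,4,5], and the class name `GaussianGenerator`, the four-dimensional transition vectors, and the synthetic data stream are all hypothetical.

```python
import math
import random


class GaussianGenerator:
    """Toy generative memory: a running diagonal Gaussian over transitions."""

    def __init__(self, dim, seed=0):
        self.n = 0
        self.mean = [0.0] * dim
        self.m2 = [0.0] * dim  # running sum of squared deviations (Welford)
        self.rng = random.Random(seed)

    def update(self, x):
        # Welford's online update: constant memory, no transition is stored.
        self.n += 1
        for i, xi in enumerate(x):
            delta = xi - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (xi - self.mean[i])

    def sample(self, batch_size):
        # Draw pseudo-transitions from the fitted per-dimension Gaussians.
        std = [math.sqrt(m / max(self.n - 1, 1)) for m in self.m2]
        return [
            [self.rng.gauss(mu, s) for mu, s in zip(self.mean, std)]
            for _ in range(batch_size)
        ]


# Synthetic stream of transition vectors (an assumption for illustration).
env_rng = random.Random(1)
generator = GaussianGenerator(dim=4)
for _ in range(5000):
    transition = [
        env_rng.gauss(2.0, 0.5),   # state feature
        env_rng.gauss(-1.0, 1.0),  # action
        1.0,                       # reward
        env_rng.gauss(2.0, 0.5),   # next-state feature
    ]
    generator.update(transition)  # the transition is discarded afterwards

# Pseudo-transitions that would be fed to the learner instead of a buffer sample.
replayed = generator.sample(32)
```

The generator keeps only a mean and a variance accumulator per dimension, regardless of how many transitions it has seen. A single Gaussian cannot capture the structure of real transitions, which is why selecting a suitable generative model is part of the assignment.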

Phase 1 – perform a literature survey

Phase 2 – devise a method, methodology, or systematic study to address the chosen subtopic

Phase 3 – perform well-designed experiments and draw conclusions

Phase 4 – prepare the project output: code and report

Possible outcomes: novel methods for memory-free continual learning, open-source code, and publishable results.

Requirements

- Basic knowledge of calculus, probability, and optimization

- Good programming skills

- Basic understanding of artificial neural networks and reinforcement learning

Learning Objectives - Upon successful completion of this project, the student will have gained:

- An understanding of the fundamental concepts behind continual learning and artificial neural networks

- Practical skills in working with neural networks for continual learning and in creating an open-source software product

References:

[1] L.J. Lin: “Self-improving reactive agents based on reinforcement learning, planning and teaching”, Machine Learning 8(3):293–321, DOI 10.1007/BF00992699, 1992

[2] S. Fujimoto, H. van Hoof, D. Meger: “Addressing Function Approximation Error in Actor-Critic Methods”, ICML, https://arxiv.org/pdf/1802.09477.pdf, 2018

[3] D.C. Mocanu, M. Torres Vega, E. Eaton, P. Stone, A. Liotta: “Online Contrastive Divergence with Generative Replay: Experience Replay without Storing Data”, https://arxiv.org/abs/1610.05555, 2016

[4] H. Shin, J.K. Lee, J. Kim, J. Kim: “Continual Learning with Deep Generative Replay”, NIPS, 2017

[5] A. Raghavan, J. Hostetler, S. Chai: “Generative Memory for Lifelong Reinforcement Learning”, https://arxiv.org/abs/1902.08349, 2019

[6] https://sites.google.com/view/continual2018