This page contains information for teachers.

- Advisory Board I-Tech

Although the I-Tech programme has always had good connections with the professional field, and a survey of the professional field had been carried out, a professional advisory board had never been formalized. In 2019, a professional advisory board was established. The board has five members and meets once a year. It supports the I-Tech programme in the following ways:

- Acting as a sounding board for technological innovation and trends in technology, media and arts.

- Advising the programme on curriculum content.

- Helping to build a network in which experiences can be exchanged, and helping the programme to find:

  - relevant and realistic student projects in industry, and

  - guest lecturers.

Contact between the I-Tech programme and board members need not be restricted to the regular meetings. The board currently consists of the following members:

- Assessment Committee I-Tech

The Examination Board is commissioned to set out policy for safeguarding the quality of testing and examination. This document describes the general approach for evaluating the assessment of courses and of the final qualifications of the Master Programme Interaction Technology (I-Tech).

- Work Method Assessment Committee I-Tech

1. Introduction

The Examination Board is commissioned to set out policy for safeguarding the quality of testing and examination[1]. This webpage describes the general approach for evaluating the assessment of courses and of the final qualifications of the Master Programme Interaction Technology (I-Tech).

2. Starting point

To carry out its responsibility for monitoring the quality of course assessment and of the final qualifications within the I-Tech master programme, the Examination Board has formed an Assessment Committee. This committee collaborates with the Educational Consultant of the Faculty of Electrical Engineering, Mathematics and Computer Science (EEMCS). The members of the Assessment Committee are:

- Ir. J. Scholten (Hans) - Chair

- Dr. Ir. W. Eggink (Wouter)

- K.M.J. Slotman (Karen) - Educational Consultant

3. Method

3.1. Evaluating the quality of course assessments

Each year, the quality of assessment within I-Tech master courses is evaluated through peer review, i.e. lecturers reviewing lecturers. The Assessment Committee selects a maximum of five courses based on criteria such as: Is it a new course? Do the results of the student evaluation show remarkable outcomes on the questions regarding testing? If a course consists of multiple types of tests, the Assessment Committee indicates which test(s) should be evaluated. Once the courses for the assessment evaluation have been selected, the lecturers involved are informed and instructed about the peer review approach. For this type of evaluation, the following elements are considered[2]:

- Design

- Construction of test(s)

- Test taking

- Preparation of students

- Assessing results

- Analysis and evaluation

Peer review approach:

The lecturer of the selected course collects the following information:

- Assessment plan: the overall description of how the course is assessed, including an assessment matrix.

- Assessment matrix: an overview (with additional explanation) that provides insight into the learning goals and how they are reflected in the questions of the test.

- Test example (latest version)

- Student evaluation (latest version)

- Outcome of Panel discussion - CREEC[3] (if applicable)

The lecturer fills out Annex A and submits all documents to another appointed lecturer within the I-Tech master programme who is involved in a comparable type of course.

The lecturers assigned to evaluate the quality of assessment should hold a University Teaching Qualification (UTQ)[4] and can use Annex B for a systematic evaluation. After this form has been filled out, the lecturer of the selected course receives a copy of the evaluation (Annex B) and writes an improvement plan. Both lecturers are encouraged to collaborate in this part of the process; for example, the evaluating lecturer can explain the content of the evaluation further or provide input for the improvement plan. The improvement plan and the two forms (Annex A and B) must be submitted to the members of the Assessment Committee for further analysis and archiving. If certain trends or remarkable outcomes are detected, the Assessment Committee informs the programme management so that further action can be taken if necessary. If a course undergoes major changes, the Assessment Committee monitors its student evaluation in the next academic year.

In Annex C, the planning for assessment evaluation is presented, including the selected courses for academic year 2018-2019.

3.2. Evaluating the assessment of the final qualifications

The final qualifications are assessed in the second year through the final project (30 EC[5]). After successfully completing this part of the curriculum by submitting the thesis and giving a final presentation, the student obtains the I-Tech master's diploma, provided that the student has obtained 120 EC in total, including all mandatory courses of the programme.

Graduation carousel

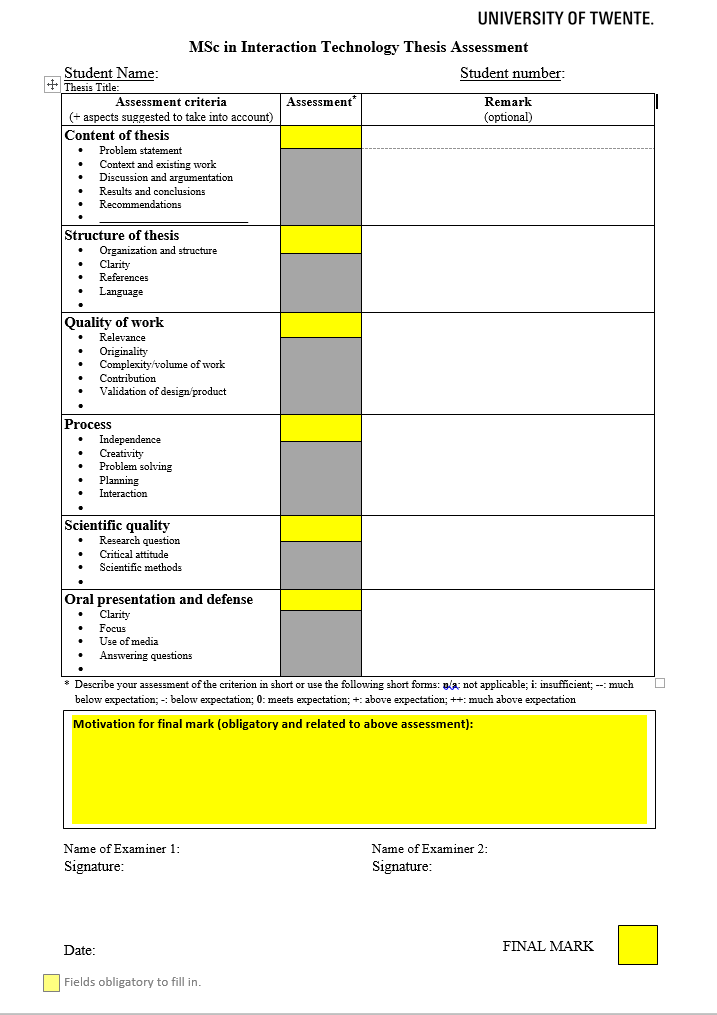

Once a year, the programme management selects a number of final projects and organizes a graduation carousel. The purpose of the graduation carousel is to assess the quality of the grading of final projects. The programme management assigns several examiners to assess the selected students’ theses again, using the assessment matrix for final projects (see Annex D). The assessment criteria for the final project are:

- Content of the thesis

- Structure of the thesis

- Quality of work

- Process

- Scientific quality

- Oral presentation and defense

Evaluation approach:

Once a year, the I-Tech programme provides the Assessment Committee with the following data:

- An overview of the master thesis grades achieved in that academic year

- The final reports of the selected theses, including:

  - the original assessment matrices filled out by the first (and second) thesis supervisor[6], and

  - the assessment matrices and corresponding grades filled out by another examiner.

The Assessment Committee critically analyzes and reflects on these data to determine whether the level achieved in the final projects matches the intended learning outcomes and is in accordance with the final qualifications of the programme. The second assessment by another examiner is expected to lead to a comparable final grade. The Assessment Committee reports its findings to the programme. These findings may give the programme reason to formulate points for improvement and take appropriate action, completing the quality cycle[7].
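To make the comparison between an original thesis grade and the carousel grade concrete, the sketch below computes the absolute difference per thesis. It reports the mean difference and flags theses whose two grades diverge by more than a threshold. The record layout, the names `ThesisGrades` and `compare_grades`, and the 1.0-point threshold are all hypothetical. This page does not define what counts as a "comparable" grade, and the sample values are made up.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ThesisGrades:
    """Grades for one selected thesis (hypothetical record layout)."""
    student_id: str
    original: float  # grade given by the thesis supervisor(s)
    carousel: float  # grade given by another examiner in the graduation carousel


def compare_grades(records, threshold=1.0):
    """Return the mean absolute grade difference and the IDs of theses whose
    grades differ by more than `threshold` points (an assumed criterion)."""
    diffs = [abs(r.original - r.carousel) for r in records]
    flagged = [r.student_id for r, d in zip(records, diffs) if d > threshold]
    return mean(diffs), flagged


if __name__ == "__main__":
    # Made-up example values, not real results.
    sample = [
        ThesisGrades("s1", 8.0, 7.5),
        ThesisGrades("s2", 7.0, 7.0),
        ThesisGrades("s3", 9.0, 7.5),
    ]
    mad, flagged = compare_grades(sample)
    print(f"mean absolute difference: {mad:.2f}")
    print("flagged for discussion:", flagged)
```

The flagged theses are where a discussion between the examiners is most informative. The mean difference gives a single indicator that can be tracked from year to year.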

- Annex A. Presence of required information/materials (lecturer of selected course).

Date of report:

Educational Programme: Interaction Technology (Master)

Course Block (1A;1B;2A;2B):

Name and code:

Name of evaluating lecturer:

Name(s) of involved assessor(s) / involved lecturers:

| Name | Explanation | Present? | Remarks |
|---|---|---|---|
| Description | General description of the module, course and learning objectives | | |
| Assessment scheme | Learning objectives versus choice of (different types of) test(s) (+ weighting of components that include tests) | | |
| Test specification matrix for the test | Test matrix for the test, including information about the number of questions, level (for instance Bloom’s levels), weighting and conditions | | |
| Test information for the student | Information distributed to students about the test, incl. any example questions or worked-out solutions | | |
| Test(s) | Used test(s) | | |
| Answer model / key | Including points for each item and for parts of answers (open questions) | | |
| Re-use of items | Explanation of how many items are re-used and which sources have been used | | |
| Assessment protocol | Explanation and justification of the way scores and grades are calculated; caesura | | |
| Student results, analysis and reflection | Number of participants; number passed / failed; scores per item / question and for all students; grades; frequency; standard deviation. Reflection on the data | | |
| Item analysis results and reflection | (Psychometric) results at item level, such as Cronbach’s α, the item difficulty index (P value) and the item-total correlation (Rit) or item-rest correlation (Rir). Reflection | | |
| Special circumstances or problems during test taking and actions taken (if they occurred) | Information about circumstances or problems and actions taken | | |
| Problems (if they occurred) and actions | Problems during the educational process that may have influenced the (results of the) assessment. Course of action | | |
| Feedback afterwards | Possibility for students to get feedback on, or an explanation of, the given grade | | |
| Reflection | Assessor's comments / reflection / actions (partly in relation to any earlier screening and points for improvement) | | |
| Student evaluation(s) | Results of the student evaluation(s) with regard to assessment, based on SEQ or CRITEEC | | |
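Annex A asks for item-level psychometric results: Cronbach’s α, the item difficulty index (P value), and the item-total (Rit) and item-rest (Rir) correlations. The sketch below computes these from a score matrix using standard classical-test-theory definitions:

- α from the item variances and the variance of the total scores;
- P as the mean item score divided by the maximum item score;
- Rit and Rir as Pearson correlations of the item score with the total score and with the total score excluding that item.

The data layout and the function names `item_analysis` and `pearson` are assumptions for illustration. The sample scores are made up.

```python
from math import sqrt
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (nan if either is constant)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


def item_analysis(scores, max_scores):
    """Classical item analysis for a test with at least two items.

    scores[s][i] is the score of student s on item i (hypothetical layout);
    max_scores[i] is the maximum attainable score on item i.
    Returns Cronbach's alpha and, per item, the P value, Rit and Rir.
    """
    k = len(max_scores)
    items = [[row[i] for row in scores] for i in range(k)]  # one column per item
    totals = [sum(row) for row in scores]                    # total score per student
    total_var = pvariance(totals)
    # alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)
    alpha = (k / (k - 1) * (1 - sum(pvariance(it) for it in items) / total_var)
             if total_var else float("nan"))
    report = []
    for it, max_score in zip(items, max_scores):
        rest = [t - s for t, s in zip(totals, it)]  # total score without this item
        report.append({
            "p": mean(it) / max_score,   # difficulty index: mean score / max score
            "rit": pearson(it, totals),  # item-total correlation
            "rir": pearson(it, rest),    # item-rest correlation
        })
    return alpha, report


if __name__ == "__main__":
    # Made-up scores: 4 students, 3 dichotomous (0/1) items.
    alpha, report = item_analysis([[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]], [1, 1, 1])
    print(f"Cronbach's alpha = {alpha:.2f}")
    for n, r in enumerate(report, start=1):
        print(f"item {n}: P = {r['p']:.2f}, Rit = {r['rit']:.2f}, Rir = {r['rir']:.2f}")
```

Rir is generally the more informative of the two correlations. Rit includes the item in the total it is correlated with, which inflates it, especially for tests with few items.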

- Annex B. Peer review - evaluation plan (evaluating lecturer)[8]

Evaluation point 1) Design

Did the test assess what the students should have learned? (Relationship between learning objectives and test; validity)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Are the learning objectives clear? Well formulated? | | |
| Is it clear how and whether all the learning objectives of the module (component) or course are assessed? The weighting of the objectives? Conditions for passing? (assessment scheme) | | |
| Is it clear which learning objectives were tested by the written test? By what kind of question type? At what level (Bloom or other)? Weighting? Special conditions? (test specification matrix) | | |
| Is the test type used adequate? (alignment) | | |

Evaluation point 2) Construction of test(s)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Are the questions / items of good quality? For MCQ: is the quality of the alternatives adequate? | | |
| Did the test consist of enough items to give a reliable impression of what the students have learned? | | |
| Were items from former / other tests re-used? How many? | | |

Evaluation point 3) Test taking – Written exam (if applicable)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Did the test have a cover sheet with relevant information? Were achievable scores for each (sub)question indicated? | | |
| Did any problems occur during the test taking? What was the solution? | | |
| Were measures taken to detect or prevent fraud? | | |

Evaluation point 3) Test taking – Oral exam (if applicable)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| How were the questions selected? Did all students get the same questions? Were achievable scores for each (sub)question indicated? | | |
| Did any problems occur during the test taking? What was the solution? | | |
| Were measures taken to detect or prevent fraud? | | |

Evaluation point 3) Test taking – Individual or group assignments (if applicable)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Did any problems occur during the group / individual work? What was the solution? | | |
| How was the grade determined? Explain the choices made. | | |
| Were measures taken to detect or prevent fraud? | | |

Evaluation point 3) Test taking – Projects (if applicable)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Did the project description reflect enough to give a reliable impression of what the students have learned? | | |
| Did any problems occur during the project? What was the solution? | | |
| Were measures taken to detect or prevent fraud? | | |
| How was the grade determined? Explain the choices made. | | |

Evaluation point 4) Were the students well prepared? (Transparency)

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Was information provided to the students about the learning objectives, the testing method and the way the final mark is determined? | | |
| Was information provided about the type of questions, e.g. by making practice material or examples available? | | |

Evaluation point 5) Assessing the results

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Is the answer model or answer key adequate? Does it provide the scores for each question? Scores for partly correct answers (essay tests)? | | |
| Is it clear how the grade has been calculated (conversion of scores to a grade)? Is the cutting score appropriate? (Does it give adequate guarantee that students achieved the learning objectives? Does it distinguish between ‘poor’ and ‘good’ students? Has the lecturer justified the cutting score?) | | |
| Was there a possibility for students to get feedback on, or an explanation of, the given grade? | | |

Evaluation point 6) Analysis and evaluation

| Question | Judgement (++; +; ±; -; -- or Yes / No) | Explanation / remarks |
|---|---|---|
| Is there an overview of the student results? | | |
| Was an analysis of the student results (final grades) carried out? Did the results show any remarkable issues? Reflection of the examiner? Points for improvement? | | |
| Was an analysis of the student results for each of the exams (if more than one) carried out? Did the results show any remarkable issues? Reflection of the examiner? | | |
| Was an item analysis carried out? Did the results show any remarkable issues? Was appropriate action taken where necessary? Reflection of the examiner? | | |
| Was a student evaluation carried out, and did the examiner reflect on its results? Do the student evaluation or complaints give reason for action (now or in the future)? | | |
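Evaluation point 5 asks whether it is clear how scores are converted to a grade and whether the cutting score is appropriate. As an illustration only, the sketch below implements one common conversion: a piecewise-linear mapping in which the cutting score lands exactly on the pass mark. The 1-10 grade scale with a pass mark of 5.5, the function name `score_to_grade` and its parameters are assumptions. The actual conversion and caesura are chosen and justified by the lecturer in the assessment protocol.

```python
def score_to_grade(score, max_score, cutting_score,
                   min_grade=1.0, pass_grade=5.5, max_grade=10.0):
    """Piecewise-linear score-to-grade conversion (illustrative assumption).

    0 points maps to min_grade, the cutting score maps to pass_grade and
    max_score maps to max_grade; scores in between are interpolated linearly.
    The grade is returned unrounded; the rounding rule is left to the programme.
    """
    if not 0 < cutting_score < max_score:
        raise ValueError("cutting score must lie strictly between 0 and max_score")
    if not 0 <= score <= max_score:
        raise ValueError("score must lie between 0 and max_score")
    if score < cutting_score:
        # Below the caesura: interpolate between min_grade and pass_grade.
        return min_grade + (pass_grade - min_grade) * score / cutting_score
    # At or above the caesura: interpolate between pass_grade and max_grade.
    return pass_grade + (max_grade - pass_grade) * (score - cutting_score) / (max_score - cutting_score)


if __name__ == "__main__":
    # Hypothetical test: 60 points in total, cutting score at 33 points (55%).
    for s in (0, 20, 33, 45, 60):
        print(s, round(score_to_grade(s, 60, 33), 2))
```

Splitting the mapping at the cutting score makes the pass/fail boundary explicit, which is what the reviewer is asked to check. Any rounding applied afterwards should be chosen so that a score below the caesura cannot be rounded up to a passing grade.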

- Annex C. Evaluation assessment courses - planning

Academic year: 2018-2019

| Course name | Course code | Teacher(s) | Evaluating teacher |
|---|---|---|---|
| Basics of Impact, Innovation & Entrepreneurship | 201800229 | dr. K. Zalewska-Kurek | |
| Basic Machine Learning | 201600070 | dr.ing. G. Englebienne, dr. M. Poel | dr. A.M. Schaafstal |
| Empirical Methods for Designers | 201500008 | F. Schuberth | dr. A.M. Schaafstal |
| Concepts, Measures and Methods | 201800226 | prof.dr. J.M.C. Schraagen | dr. K. Zalewska-Kurek |
| Foundations of Interaction Technology | 201800234 | dr. R. Klaassen | dr. K.P. Truong |

Academic year: 2019-2020[9]

| Course name | Course code | Teacher(s) | Evaluating teacher |
|---|---|---|---|

- Annex D. Assessment matrix final project – Master I-Tech

[1] See the website of the EEMCS Examination Board.

[2] These aspects are further elaborated in Annex B.

[3] CREEC is a programme committee that organizes panel evaluation meetings every block.

[4] More information can be found on the website of the Centre of Expertise in Learning and Teaching (CELT).

[5] EC = European Credit. One EC equals 28 hours of study load.

[6] At least one assessor should have examiner rights and be assigned to grade the student’s final project.

[7] Plan-Do-Check-Act (PDCA).

[8] Based on the screening points developed by the Centre of Expertise in Learning and Teaching (CELT).

[9] To be determined / selected by the Assessment Committee before the end of block 1B.