HUMAN MEDIA INTERACTION

The Human Media Interaction (HMI) group does research into multimodal interaction: from brain-computer interfaces to social robots. It is a multidisciplinary group in which computer science meets social science to investigate, design, and evaluate novel forms of human-computer interaction. The HMI group is heavily involved in the MSc. programme “Interaction Technology” and the BSc. programme “Creative Technology”.

Our research

The research of our group concerns the perception-action cycle of understanding human behaviors and generating system responses, supporting an ongoing dialogue with the user. It stems from the premise that understanding the user (by automated evaluation of speech, pose, gestures, touch, facial expressions, social behaviors, interactions with other humans, bio-physical signals, and all content humans create) should inform the generation of intuitive and satisfying system responses. Our focus on understanding how and why people use interactive media contributes to making interactive systems more socially capable, safe, acceptable, and fun. Evaluation of the resulting systems therefore generally focuses on the user's perception of them and the experience that they engender. These issues are investigated through the design, implementation, and analysis of systems across different application areas and a variety of contexts.

Social Robots

We strive to make robots behave as socially appropriately as possible in a given situation. The basis for modeling such behavior is the in-depth analysis of human-human and human-robot interaction from a social science point of view, taking the user, the system, and the situation into account. Our work results in autonomous social robots that can assist humans in their daily lives, with applications ranging from helping the elderly in their homes to engaging visitors at outdoor tourist sites.

Entertainment computing and body-centric interaction

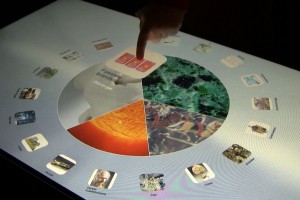

Our research on body-centric interaction focuses on new nonverbal interaction methods, including whole-body, tangible, and tactile interaction. Both the elicitation and the interpretation of emotional and social behavior are essential in this line of research. The interactive systems we create range from wearable devices and smart material interfaces to ambient environments. Example applications include ambient entertainment, interactive playgrounds, mediated social touch, and visitor guidance systems based on smart materials.

Social VR for Smart, Social, and Sportive Society

Social VR is a technology in which people, in the form of 3D virtual representations (avatars), can meet, move, and mingle within the same virtual space. Social VR has been a topic of research at HMI since 2020. We started with a collaboration with an external PhD candidate working on performative prototyping, who saw Social VR as a potential design tool for collocated and distributed design. Since then, we have broadened our investigation into how platforms such as Resonite can create societal impact in various domains. We work with a Research through Design approach: we gain new knowledge by actively participating in the design process and reflecting on the resulting artifacts, which yields new forms of interaction (e.g., fishing emoticons and proximity-sensitive wearables), terms, or guidelines. The work spans stakeholder-financed as well as student-initiated research domains, including Sports, Social Interaction and Intimacy, and various Smart uses, such as Social VR as an educational tool, for instance for vocabulary acquisition. These projects build on curiosity and personal fascination, combined with insights into the recent evolution of the domain. Further use cases include steps toward combining the social engineering aspects of cybersecurity training with more digital components, VR-specific representations of artworks, and interactive experiences that provide a more inclusive view of cultural heritage (including projects with Rembrandthuis and the National Archive). We are always looking to explore this field further, so feel free to reach out!

Conversational and interactive agents

To enable humans to have real-time conversations with intelligent (virtual) agents, we at HMI jointly address three aspects: behavior sensing, modeling, and generation. Using computer vision and speech technology, we observe the human conversational participant’s body and head movements, facial expressions, and paralinguistic vocalizations. From these we detect social cues, which are informative of the stance and intentions of the participant. These cues are used to model the interaction and to generate appropriate conversational behavior for the agent. Our research combines corpus-based analysis with pattern recognition, multimodal behavior realization, and perceptual evaluation.

Storytelling and serious games

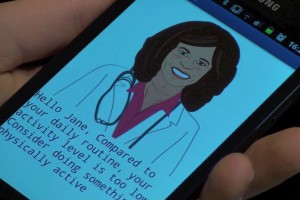

In day-to-day life, people can benefit from systems that coach them to improve their awareness and their skills at certain tasks. This coaching can take many forms: from gently suggesting a workout (for improved health) to presenting difficult challenges in serious games. At HMI, we investigate how we can make these systems enjoyable and motivating to use. We achieve this through advanced storytelling techniques such as emergent narrative, and through serious games that adapt their game mechanics to their players’ style so that these games become more effective. We work on mobile, distributed multi-device coaching and multimodal training games, using embodied agents and interactive narrative to provide context-adaptive feedback. Application areas include, for example, social awareness training for police and motivational health coaching for people in rehabilitation.

Brain-Computer Interaction

Brain-Computer Interfaces (BCIs) provide a novel channel of information. They acquire input directly from the brain itself, using EEG or similar techniques. At HMI we research various aspects of BCIs, especially their possible roles in enriching human-computer interaction. We have developed and evaluated BCI-controlled games incorporating custom BCI pipelines, using various types of feedback as well as various mappings of mental tasks to in-game actions. Finally, we also look at the impact of brain-computer interfaces on society, and at the ethical and moral issues related to the research, development, and exploitation of such neurotechnologies.

Language and multimedia: analysis, retrieval and interaction

Human interaction with large multimedia collections relies on automated content analysis for indexing and annotation purposes. HMI researches models for the automatic analysis of data by exploiting the synergy between information retrieval, speech and language processing, and database technology.

We cover a variety of data types, including text, speech, and video. Application domains range from cultural heritage and biomedicine to print, broadcast, and social media. Specific research topics include information extraction, semantic access, federated search, multimedia information retrieval, multimedia linking, and audiovisual data structuring. We also develop tools to support scholarly work in various disciplines in the social sciences and humanities, based on the automatic analysis of text and speech. Examples include improving automatic cyber-bullying detection, search and navigation tools for oral history, and classification of folktales and online narratives.