Toolbox

Designing assessment

The first step of the assessment cycle is deciding on your assessment design. You start by formulating your learning objectives, thinking about the purposes of the assessment, choosing a suitable method (or more than one), and bringing it all together in your assessment scheme. Then you construct the assessment specification table, as the blueprint for a specific assessment method you will use. With these sound preparatory actions, you will be well on your way.

- Prerequisite: Formulating learning objectives

The essence of learning outcomes and how to formulate them

Why are learning objectives so essential?

Clear and well-formulated learning outcomes guide all decisions you need to make regarding the teaching and learning activities and assessment in your course (see also: Constructive alignment).

NB. We mostly use the term learning objectives, but you will also often hear the terms intended learning outcomes (ILOs) or learning goals.

Advantages of formulating learning objectives:

- it provides focus. It shows what is important and what the general level of learning is.

- it is a way of sharing your ideas about the learning objectives within your programme. Peers, educational designers, and colleagues can give advice and feedback. You and others can see and discuss how a course fits into the curriculum plan and relates to other courses.

- it gives guidance on the design of your assessment.

- it gives guidance on the design of your teaching activities.

- it gives guidance on the materials you need for your students.

- it gives direction to the students' learning process.

How to write clear learning objectives?

It is important to formulate clear learning objectives. But how do you do this? The following video gives a good idea of how to write clear learning objectives:

To summarize:

- You start with: At the end of the course, the student is able to... Or make it a bit more personal: ... you will be able to....

- Outcomes should be observable and measurable. They demonstrate that students have acquired knowledge, skills, and competencies at a specific proficiency level.

- Use active verbs, such as write, draw, tell, analyze, or calculate. Avoid vague and ambiguous verbs, such as think or understand.

- Be as specific and clear as possible.
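As a rough illustration, the checklist above can be turned into a small script that screens draft objectives. This is only a sketch: the function name `check_objective` is hypothetical, the verb lists contain only the examples mentioned above, and the check matches base verb forms only (it will not catch "understands"). It cannot replace your own judgment or feedback from colleagues.

```python
import re

# Verb lists taken from the summary above; extend them for your own course.
ACTIVE_VERBS = {"write", "draw", "tell", "analyze", "calculate"}
VAGUE_VERBS = {"think", "understand"}
STEM = "able to"  # matches "is able to" and "will be able to"


def check_objective(objective: str) -> list[str]:
    """Return a list of warnings for one draft learning objective."""
    warnings = []
    text = objective.lower()
    if STEM not in text:
        warnings.append("missing stem '... is able to ...'")
    # Split into lowercase words so that only whole words are compared.
    words = set(re.findall(r"[a-z]+", text))
    vague = sorted(words & VAGUE_VERBS)
    if vague:
        warnings.append("vague verb(s): " + ", ".join(vague))
    if not words & ACTIVE_VERBS:
        warnings.append("no active verb from the list found")
    return warnings


if __name__ == "__main__":
    drafts = [
        "At the end of the course, the student is able to calculate the load on a beam.",
        "The student is able to understand statistics.",
    ]
    for draft in drafts:
        print(draft, "->", check_objective(draft) or "no warnings")
```

An empty list means the draft passes these simple checks. Whether it is also specific and measurable enough is still something to judge yourself.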

Action verbs

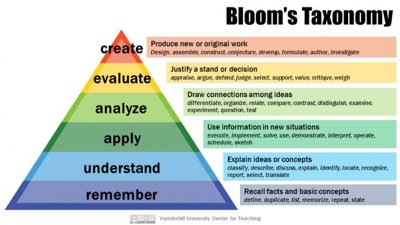

Benjamin Bloom (1956) designed a taxonomy for learning objectives in the cognitive (knowledge-based), affective (emotion-based), and psychomotor (action-based) domain.

The cognitive domain taxonomy is the one most used in education. Bloom's taxonomy helps to structure and align the learning objectives, assessments, and teaching activities. Most importantly, the model may help you to think about what you really want the students to be able to do at the end of your course, and to state this as specifically and clearly as possible. See it as an aid.

The picture shows the taxonomy in the revised version by Anderson & Krathwohl (2001). You can find a lot of information and useful models online when you search for Bloom's taxonomy. See also the suggestions further below.

For those who like to get more ideas:

Would you like to have some support in formulating your learning objectives? This Learning Objective Maker (easygenerator.com) might help.

Hand-out Writing learning outcomes: A very helpful, practical article about formulating learning objectives. In this handout, you can also find information about Benjamin Bloom's taxonomy. Bloom identified several levels, each with a list of suitable verbs, for describing levels in objectives. The levels are arranged from the least complex levels of thinking to the most complex levels of thinking. NB. We are not sure about the copyright. If you know more about it, we would like to give credit.

Alternatives for Bloom?

Bloom is very common, but there are other taxonomies. One of the more well-known ones is the Structure of the Observed Learning Outcome (SOLO) taxonomy, a framework devised by John Biggs and Kevin Collis (1982). For more information, see, for instance: Align with taxonomies - Learning and Teaching: Teach HQ (monash.edu).

Some more interesting links, videos, and documents:

- For an elaborate list of verbs you can use, have a look at this site, Bloom's Taxonomy | Center for Innovative Teaching and Learning | Northern Illinois University, or this one, Verbs and Outcomes Based on Bloom’s Revised Taxonomy.docx | Powered by Box

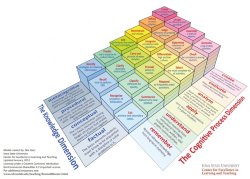

If you want to go a step further... You can combine the Bloom levels with the so-called "Knowledge Dimensions", going from concrete to abstract: factual, conceptual, procedural, and metacognitive. This gives you a two-dimensional model, as shown in the picture. To read more about this: Revised Bloom's Handout

- Here is a video about Bloom's Taxonomy (external site).

- Bloom's taxonomy of learning domains: Nice examples of the link between the levels and teaching activities.

- Revised Bloom’s Taxonomy – Center for Excellence in Learning and Teaching (iastate.edu)

- A very useful site about learning objectives (and much more) is that of the Eberly Center at Carnegie Mellon University.

- Decide on the purpose of your assessment

What is the purpose of your assessment?

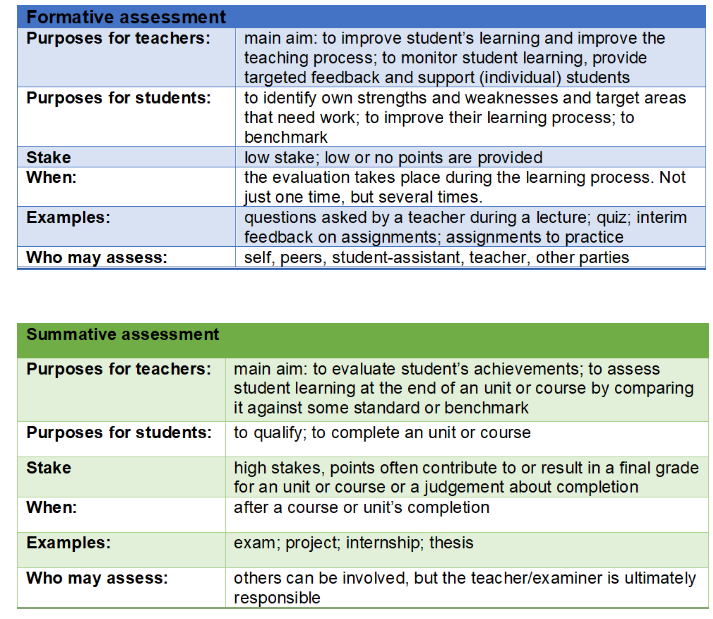

A distinction is often made between two main purposes for assessing students: formative and summative. The image indicates the differences.

It is important to realize that the distinction is about the purpose of assessment and not about the method used. A multiple choice (MC) test can be used for a summative purpose; based on the scores, students get a grade for the course. An MC test can also be used during a course for a formative purpose; to let the students check how much of the content they've already mastered and for the teacher to see whether extra attention is needed for some problem areas.

A main characteristic of formative assessment is that it involves feedback, whether the feedback is based on self-assessment, peer assessment, or feedback from the teacher or teaching assistants. The feedback might be provided on paper or in Canvas (model answers to check homework questions, for instance) or in plenary during a lecture. The main goal is to support the students in their learning process. Intermediate feedback provided to students when they work on a larger assignment (e.g., a project assignment) can also be seen as formative assessment.

TIP: Look under "Choosing a suitable assessment method" for lists with suggestions for assessment methods.

- Choosing a suitable assessment method

How to choose a suitable assessment method?

What to consider when you are looking for a suitable method:

- What is the context? Number of students? Background of the students and experience (e.g. first-year Bachelor versus Master students).

- Most important is the alignment with learning objectives and teaching activities. What kind of evidence will show that the learning objectives have been achieved?

- Does your choice comply with the programme's assessment policy and vision?

- What is the purpose: summative and/or formative? If formative: How to make it useful for checking progress or for identification of deficiencies? How to design it so that it supports the learning process of the students?

- Will you assess individuals or groups? Or both?

- Who will be involved in the assessment process? Who will assess (self, peers, tutors, teacher, external parties)?

- Does the assessment method suit your experience, or do you have ways to learn about the method or get assistance?

- Is it practical and efficient? Is it doable (workload for students as well as teachers and others involved)?

Advice: In general, if you teach a substantial education unit, it is recommended to use different types of assessment methods. This way, you get a broader picture of the capabilities of the students, you cater to different kinds of learning styles and capabilities, and it might be more motivating for students. Whether this is doable and efficient is an important consideration, however, and might limit the options.

What kind of assessment methods are possible?

Several possibilities might be suitable for assessing your learning objectives. The Centre for Teaching Excellence, University of Waterloo, provides a nice overview of all kinds of assessment methods: Types of assignments and tests.

For more ideas, you might also look at Alternative assessment and Effective and efficient assessment

You can also consider the possibilities of digital tools. The UT has a license for Contest, Remindo, and Grasple (limited) for written tests. The UT also has a license for Wooclap, a tool that can be used formatively to ask questions during lectures. On this site, you can find information about educational applications that can be used at the UT: Overview educational applications.

For those who like to get more ideas:

- This is a very nice overview of assessment methods from A-Z.

- Aligning Outcomes, Assessments, and Instruction | Centre for Teaching Excellence | University of Waterloo (uwaterloo.ca)

- An overview linking assessment methods to Bloom's taxonomy: BloomwAssessandTestingJan08Finl (wordpress.com)

- Nice list of formative assessment activities, including explaining what you can do with the data to analyze students’ understanding or progress: Selecting and Using Activities and Assessments | Office Of The Associate Vice President For Academic Health Sciences (umn.edu) / active_learning_activities_and_assessments.pdf (umn.edu)

- For formative assessment during lectures and tutorials, you can use questions and answers, or a quiz, for instance, but there are a lot of other possibilities. Look [here] for some nice ideas.

- You've learned about Bloom's taxonomy. The University of Waterloo provides an elaborate overview of Bloom's Taxonomy versus learning activities, versus assessment methods. See: Bloom's Taxonomy Learning Activities and Assessments (external site).

- An interesting way for students to engage in formative assessment is the IF-AT method, also known as scratch cards: IF-AT aka Scratch Cards | Learn TBL.

- Assessment scheme

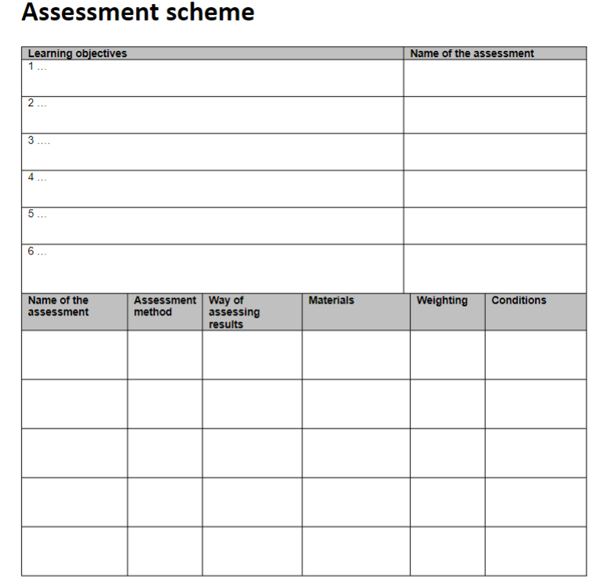

How to create an assessment scheme for your course

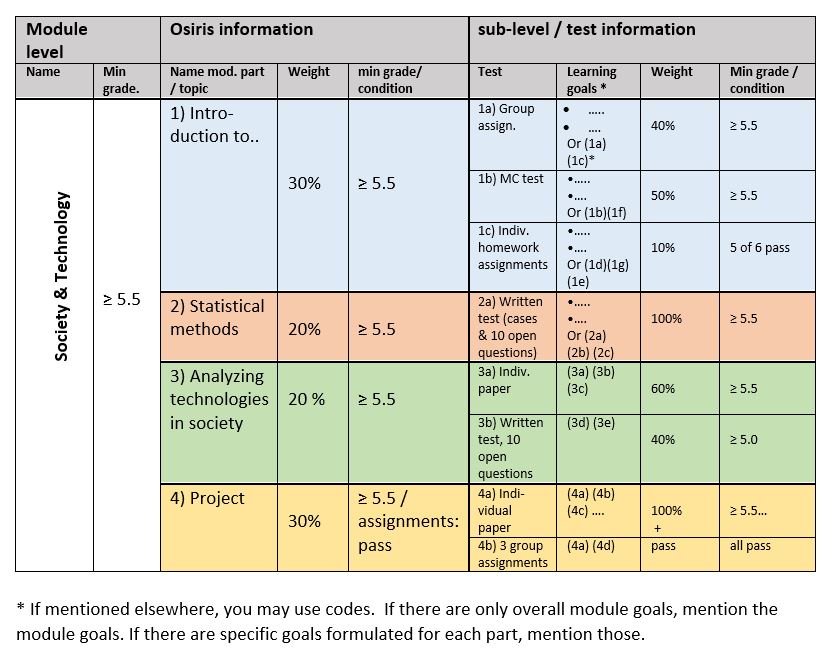

An assessment scheme (aka test plan, assessment plan) helps to show the validity of your assessment for a course (unit, module).

It provides an overview of all assessments in relation to the learning objectives and with weights and conditions.

At the UT it is expected that the above-mentioned information is presented to the students before the start of a course. As a teacher, you are expected to be able to design an assessment scheme for your course or unit.
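To make the idea concrete, an assessment scheme can be written down as structured data and checked for two basic properties: the weights add up to 100%, and every learning objective is assessed at least once. The sketch below is an illustration only. The class `Assessment`, the function `validate_scheme`, and all names, weights, and conditions in the example are hypothetical and not a UT format.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    name: str
    weight: int            # percentage of the final grade
    objectives: set[str]   # learning objectives covered, e.g. {"LO1"}
    condition: str = ""    # free-text condition, e.g. a minimum grade


def validate_scheme(scheme: list[Assessment], all_objectives: list[str]) -> list[str]:
    """Return a list of problems found in an assessment scheme."""
    problems = []
    total = sum(a.weight for a in scheme)
    if total != 100:
        problems.append(f"weights sum to {total}%, expected 100%")
    # Collect every objective that is covered by at least one assessment.
    covered = set().union(*(a.objectives for a in scheme))
    missing = sorted(set(all_objectives) - covered)
    if missing:
        problems.append("objectives not assessed: " + ", ".join(missing))
    return problems


if __name__ == "__main__":
    # Hypothetical example course with four learning objectives.
    scheme = [
        Assessment("Written test", 60, {"LO1", "LO2"}, "minimum grade 5.5"),
        Assessment("Project report", 40, {"LO3"}, "group assignment"),
    ]
    print(validate_scheme(scheme, ["LO1", "LO2", "LO3", "LO4"]))
```

In this example the weights are fine, but LO4 is not covered by any assessment, which is exactly the kind of gap an assessment scheme is meant to reveal.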

You can use different kinds of formats. Below is an example of a format for a course (or education unit within a module), and further below is an example of how an assessment scheme for a module might look.

- Assessment specification table

An assessment specification table: a blueprint for your assessment

What is an assessment specification table for?

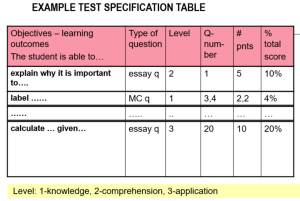

Let us say you are constructing a written test. In a test, you cannot ask for everything the students have had to learn. The test will always use a sample of questions to determine what the students have learned. But how can you be sure that the sample is representative of the subject matter? That the learning objectives are well covered? That the questions asked are on the right level (of Bloom)? That your resit test will be similar to your first test? That if you use a database of questions, each test is similar?

An assessment specification table (aka a test specification table or matrix) can help you with this. It can be seen as the blueprint. It helps the examiner to construct a test that focuses on the key areas and weights different areas based on their importance.
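If you work with a database of questions, the blueprint idea can be sketched in a few lines of code: the specification table fixes how many questions each test needs per learning objective and Bloom level, and every test (first attempt or resit) is drawn to match it. This is a minimal sketch under assumptions: the blueprint cells, the question fields `objective` and `level`, and the function names are hypothetical. Real tools such as Remindo have their own way of doing this.

```python
import random
from collections import Counter

# Hypothetical blueprint: (learning objective, Bloom level) -> number of questions
BLUEPRINT = {
    ("LO1", "remember"): 2,
    ("LO1", "apply"): 1,
    ("LO2", "analyze"): 2,
}


def draw_test(bank, blueprint, seed=None):
    """Draw questions from the bank so that each blueprint cell is filled."""
    rng = random.Random(seed)
    test = []
    for (objective, level), n in blueprint.items():
        pool = [q for q in bank if q["objective"] == objective and q["level"] == level]
        if len(pool) < n:
            raise ValueError(f"not enough questions for {objective} at level '{level}'")
        test.extend(rng.sample(pool, n))
    return test


def cell_counts(test):
    """Count the questions per (objective, level) cell of a drawn test."""
    return Counter((q["objective"], q["level"]) for q in test)


if __name__ == "__main__":
    # Hypothetical question bank with three questions per blueprint cell.
    bank = [
        {"id": f"{lo}-{level}-{i}", "objective": lo, "level": level}
        for (lo, level) in BLUEPRINT
        for i in range(3)
    ]
    first = draw_test(bank, BLUEPRINT, seed=1)
    resit = draw_test(bank, BLUEPRINT, seed=2)
    # Both tests have the same coverage, even if the questions differ.
    print(cell_counts(first) == cell_counts(resit))
```

The questions in the first test and the resit may differ, but both follow the same blueprint, which is what makes them comparable.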

A test specification table provides evidence that a test has content and construct validity. Construct validity means: Does the test measure the concept that it’s intended to measure? Content validity means: Is the test fully representative of what it aims to measure? (To read more about relevant criteria, see [The four types of validity].)

A format can be downloaded [here].

An assessment specification table for assignments

An assessment specification table is not only useful for written tests. It can also be used for assignments, but it will look different.

See the format below.

NB. If you use a rubric or checklist with criteria, and/or the learning objectives are assessed with several products, creating an insightful specification table might mean double work or become complicated. See if it works for you; it might give extra insight. If not, keep in mind that it is important to check whether the criteria and learning objectives are aligned.

>> A format for assignments can be downloaded [here].

- Student involvement in assessment

Students learn a lot when involved in their learning process (Vermunt & Sluijsmans, 2015). They can also learn a lot when you involve them in the design process of your education, including assessments (Bron & Veugelers, 2014).

Some ways to involve students in summative and formative assessment

- Involve students in the choice of assessment method(s)

- Involve students in formulating the assessment criteria for assignments or creating rubrics

- Let students decide which medium to use to demonstrate what they have learned (e.g., video, podcast, poster, etc.)

- Stimulate students in self-assessment. A quiz, checklist, or rubric can be used to guide them.

- Make use of peer assessment. Guidelines and criteria will help them.

- Involve students in creating test questions.

- Discuss homework assignments or, for example, sample test questions in class. Start by letting students share and discuss their solutions with peers.

"Students can assess themselves only when they have a sufficiently clear picture of the targets their learning is meant to attain" (Davies, 2011)

Besides this quote, a nice model and information can be found on the following site: Involve Students - Assessment methods (weebly.com)