Overview

In the coBOTnity project we have developed technology to build interactive playful environments for creative storytelling. Here you can find an overview of the main components and the various applications developed as part of coBOTnity.

Surfacebot embodiment

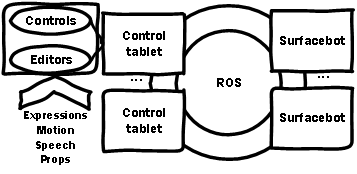

We have developed the concept and implementation of the surfacebot: a movable character embodied in a tablet display attached to a robotic platform. It can render facial expressions, speak short utterances, move, and display visual assets.

A variable number of surfacebots can be dynamically added to a play activity, with all devices connected through a ROS overlay network over WiFi. Control tablets can also be connected dynamically and used either to enact the story behaviour or to create content.

Asset editors

Besides control, there are a number of editors for creating user assets that can be included in activities. They support Text-to-Speech, recorded audio, and visual assets based on images from the internet or drawn on the tablet itself. In the backend, assets can be sequenced into compound behaviors.
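
To make the idea of a compound behavior concrete, the following minimal sketch models one as an ordered list of assets. The asset classes (`Speech`, `AudioClip`, `Image`) and the `CompoundBehaviour` container are hypothetical names chosen for illustration, not the coBOTnity backend API, and `play()` only returns the sequence of actions instead of driving a real tablet display and speaker.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Speech:
    text: str      # rendered by a Text-to-Speech engine


@dataclass
class AudioClip:
    path: str      # recorded audio file


@dataclass
class Image:
    source: str    # image from the internet or drawn on the tablet


Asset = Union[Speech, AudioClip, Image]


@dataclass
class CompoundBehaviour:
    """Hypothetical ordered sequence of user-created assets."""
    name: str
    steps: List[Asset]

    def play(self) -> List[str]:
        # Stand-in for the backend: describe each action in order
        # rather than actually executing it on a surfacebot.
        actions = []
        for step in self.steps:
            if isinstance(step, Speech):
                actions.append(f"say: {step.text}")
            elif isinstance(step, AudioClip):
                actions.append(f"play audio: {step.path}")
            else:
                actions.append(f"show image: {step.source}")
        return actions


greeting = CompoundBehaviour(
    "greeting",
    [Image("waving_hand.png"), Speech("Hello, I am a surfacebot!"), AudioClip("giggle.wav")],
)
for action in greeting.play():
    print(action)
```

Keeping the steps as plain data means the same compound behavior could be stored, edited on a tablet, and replayed later without changing code.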

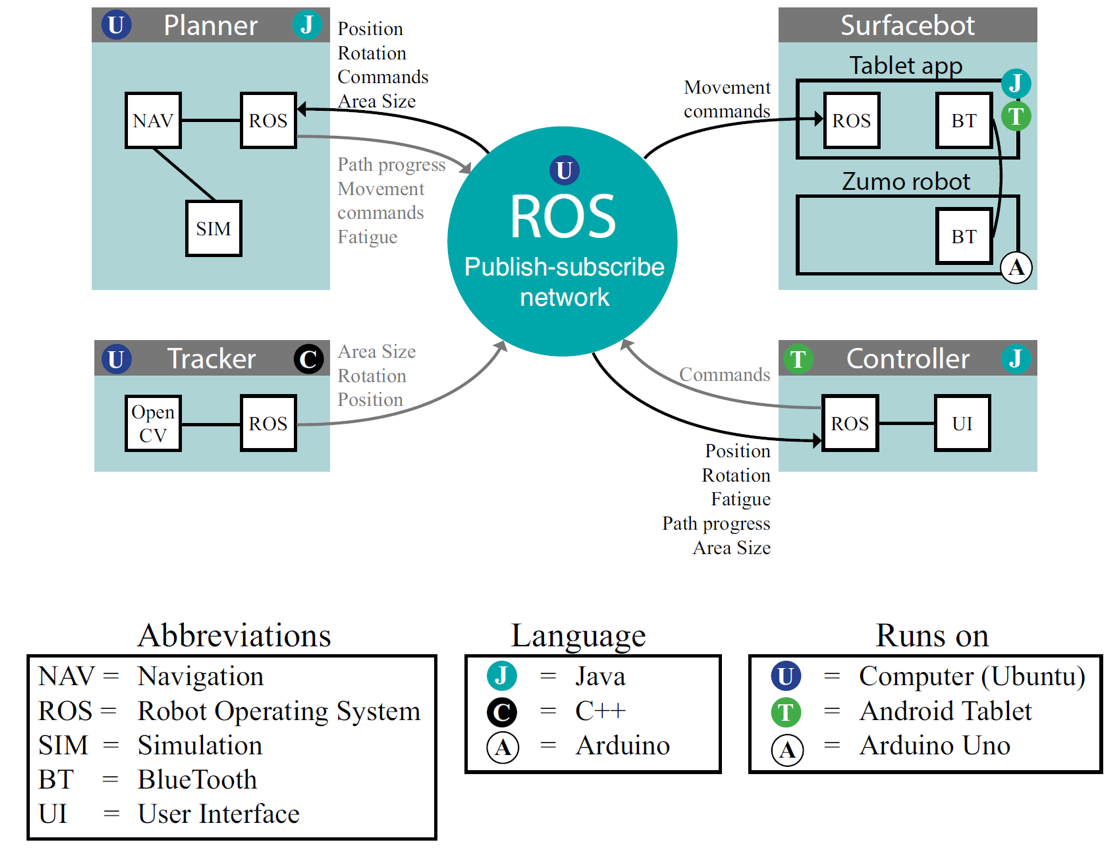

Smart Navigation

The system prototype has been extended with smart navigation features so that children do not have to spend cognitive resources on driving the bots. Children can give single destinations or paths for the bots to follow. When giving paths, users can attach modifiers to any point or landmark in the planned path; these modifiers execute behaviours when the robot reaches the landmark. For example, the character's existing facial or voice behaviours, as well as movement behaviours such as setting rotations, stop-and-go, and dancing, can be scheduled as modifiers. Besides general collision avoidance based on the RVO2 library, several social behaviours are implemented, such as following a specific character, running away from another bot and keeping a distance from it, and facing the audience after reaching a destination.
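
A path with modifiers can be sketched as a list of waypoints, each carrying the behaviours to run on arrival. This is a minimal illustration under stated assumptions: the `Waypoint` type, the fixed step size, and the arrival tolerance are hypothetical, movement is simulated in 2D, and the RVO2-based collision avoidance and social behaviours are left out.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Waypoint:
    position: Point
    modifiers: List[str] = field(default_factory=list)  # e.g. "dance", "rotate 90"


def follow_path(start: Point, path: List[Waypoint], speed: float = 0.5,
                tolerance: float = 0.05) -> List[str]:
    """Move toward each waypoint in turn and run its modifiers on arrival.

    Returns a log of executed modifiers, standing in for real robot actions.
    """
    x, y = start
    log = []
    for wp in path:
        tx, ty = wp.position
        while math.hypot(tx - x, ty - y) > tolerance:
            dist = math.hypot(tx - x, ty - y)
            step = min(speed, dist)          # never overshoot the waypoint
            x += (tx - x) / dist * step
            y += (ty - y) / dist * step
        for modifier in wp.modifiers:        # landmark reached: fire its modifiers
            log.append(f"at {wp.position}: {modifier}")
    return log


path = [
    Waypoint((1.0, 0.0), ["rotate 90"]),
    Waypoint((1.0, 1.0)),                    # plain waypoint, no modifiers
    Waypoint((0.0, 1.0), ["dance"]),
]
for line in follow_path((0.0, 0.0), path):
    print(line)
```

Attaching behaviours to waypoints rather than to time lets the child plan "what happens where" without having to time the robot's movement.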

Additionally, a fatigue model implemented for the surfacebot controls how it gains and depletes energy. If children tend to drive continuously rather than tell the story, this feature pushes them to play with story elements, because the surfacebot has to stop to recover energy.
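
One plausible way to realize such a fatigue model is an energy level that drains while driving, recovers at rest, and forces a full stop once depleted, until a resume threshold is reached. All rates, thresholds, and names below are hypothetical; the model actually used in coBOTnity may differ.

```python
from dataclasses import dataclass


@dataclass
class FatigueModel:
    """Hypothetical energy model; all rates and thresholds are illustrative."""
    energy: float = 1.0          # 1.0 = fully rested, 0.0 = exhausted
    drain_rate: float = 0.25     # energy lost per second of driving
    recovery_rate: float = 0.125 # energy regained per second at rest
    resume_level: float = 0.5    # energy needed before driving is allowed again
    exhausted: bool = False

    def update(self, driving_requested: bool, dt: float) -> bool:
        """Advance the model by dt seconds; return True if the bot drove this step."""
        can_drive = driving_requested and not self.exhausted
        if can_drive:
            self.energy = max(0.0, self.energy - self.drain_rate * dt)
            if self.energy == 0.0:
                self.exhausted = True    # force a rest
        else:
            self.energy = min(1.0, self.energy + self.recovery_rate * dt)
            if self.exhausted and self.energy >= self.resume_level:
                self.exhausted = False   # rested enough to drive again
        return can_drive


model = FatigueModel()
# Simulate a child holding the drive control for ten one-second steps.
timeline = [model.update(driving_requested=True, dt=1.0) for _ in range(10)]
print(timeline)
# [True, True, True, True, False, False, False, False, True, True]
```

The gap between exhaustion (0.0) and the resume level (0.5) adds hysteresis, so the bot does not flicker between stopping and driving while its energy is low, and the forced pause is long enough to invite story play.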

The figure depicts how the architecture has been realized in the different implemented components.

Special credits: Roberto Campisi, Luce Sandfort, Wouter Timmermans, Wouter van Veelen (CS bachelor students)

Enhanced storytelling setting

We have explored how to mediate interaction in the storytelling setting via a tablet app. The features in the enhanced setting support children in developing storytelling skills, helping them to structure the creation of stories and reflect on the links between the different events. The app facilitates the transition from free play towards more reflection in the child's storytelling process.

Special credit: Silke ter Stal (HMI master student)

Social-emotional development support

Enhancing children’s social awareness and their responses to social issues is also important. Through stories, they can experience social situations safely. The platform has been used in experiments to explore how social-emotional development can be affected by the format of the storytelling table and by the level of assistance/responsiveness of the system. Storytelling tables enhanced by technology can be useful to reinforce emotional understanding and story event recall.

Special credits: Iris Visser, Hannie Gijlers

Creative dancing tablets

Following user-centered methods, we have designed, implemented, and evaluated a dancing robotic tablet prototype for co-creative human-robot interaction. Two types of autonomous robot behavior were considered as creativity support and evaluated in a user study. While the imitation behavior was perceived as more intelligent, the generation behavior, which attempted to challenge users by differing from their input, led to a greater variety of gestures. Observations support the idea that users were inspired to some extent by the robot’s input.
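
The two creativity-support behaviors can be contrasted in a small sketch. It assumes, purely for illustration, that a gesture is summarized as a feature vector and that the generation behavior picks the repertoire gesture farthest from the user's (Euclidean distance). The feature set, the repertoire, and both function names are hypothetical and not taken from the study.

```python
import math
from typing import Dict, Tuple

# Hypothetical gesture features: (rotation, forward/backward movement, speed), each in [-1, 1].
Gesture = Tuple[float, float, float]

REPERTOIRE: Dict[str, Gesture] = {
    "spin": (1.0, 0.0, 0.8),
    "sway": (0.3, 0.0, 0.2),
    "dash": (0.0, 1.0, 1.0),
    "retreat": (0.0, -1.0, 0.4),
}


def imitate(user: Gesture) -> Gesture:
    """Imitation behavior: mirror the user's gesture back."""
    return user


def generate(user: Gesture) -> str:
    """Generation behavior: choose the repertoire gesture most different from the user's."""
    return max(REPERTOIRE, key=lambda name: math.dist(REPERTOIRE[name], user))


user_gesture = (0.9, 0.1, 0.7)   # a fast, spin-like movement
print(imitate(user_gesture))
print(generate(user_gesture))    # → retreat
```

Maximizing distance is only one way to "be different"; it guarantees contrast but ignores whether the answer is musically fitting, which a real generator would likely also consider.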

Special credits: Federico Fabiano, Hannah Pelikan, Jelle Pingen, Judith Zissoldt (HMI master students)

Enabling Emotion-aware Surfacebots

The middleware can be quickly integrated with other components used in robotic or HRI systems. For example, we have made the agents emotion-aware, enabling further applications in which the characters react emotionally based on the children's arousal. This uses the speech-based emotion recognizer developed by Jaebok Kim in the EU-funded project SQUIRREL.
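
A minimal sketch of how a character might turn arousal estimates into expressions follows. It assumes the recognizer delivers one arousal value in [0, 1] per utterance; the smoothing factor, the thresholds, and the expression names are hypothetical choices, not the interface of the SQUIRREL recognizer.

```python
from dataclasses import dataclass


@dataclass
class ArousalReactor:
    """Hypothetical mapping from speech-based arousal estimates to facial expressions."""
    alpha: float = 0.5       # weight of the newest estimate in the moving average
    low: float = 0.25        # at or below this: calm expression
    high: float = 0.75       # at or above this: excited expression
    smoothed: float = 0.5    # start from a neutral arousal level

    def react(self, arousal: float) -> str:
        # Exponential moving average, so one noisy estimate does not flip the face.
        self.smoothed = self.alpha * arousal + (1 - self.alpha) * self.smoothed
        if self.smoothed >= self.high:
            return "excited"
        if self.smoothed <= self.low:
            return "calm"
        return "attentive"


reactor = ArousalReactor()
faces = [reactor.react(a) for a in [1.0, 1.0, 0.0, 0.0, 0.0]]
print(faces)  # ['excited', 'excited', 'attentive', 'calm', 'calm']
```

Smoothing trades responsiveness for stability: a lower `alpha` makes the character calmer but slower to pick up a genuine change in the child's arousal.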


Second language learning

We used a surfacebot for second language learning by primary school children, combining storytelling with a learning-by-teaching approach. In the story, the robot is an elephant who is visiting France but doesn't know the language. The elephant can visit different locations on the tabletop, where he finds objects that he can use in his adventures once he learns their French words. The child sees the words displayed on a graphical interface together with their corresponding images, and can “teach” them to the elephant by dragging them onto an image of the elephant. Our tests showed that the robot kept the children engaged throughout the learning activity. There was also some gain in the number of words the children learned.
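
The teaching interaction can be sketched as a drop handler that adds a word to the elephant's vocabulary and thereby unlocks the matching object. The vocabulary, class, and method names are hypothetical illustrations of the mechanism described above, not the application's actual code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical word list: French word -> object it unlocks in the story.
VOCABULARY: Dict[str, str] = {
    "la clé": "key",
    "le parapluie": "umbrella",
    "la carte": "map",
}


@dataclass
class Elephant:
    known_words: Set[str] = field(default_factory=set)

    def on_drop(self, word: str, dropped_on_elephant: bool) -> bool:
        """Handle a drag-and-drop of a word; return True if it was newly learned."""
        if dropped_on_elephant and word in VOCABULARY and word not in self.known_words:
            self.known_words.add(word)
            return True
        return False  # dropped elsewhere, unknown word, or already learned

    def usable_objects(self) -> List[str]:
        """Objects found on the tabletop are usable only once their word is known."""
        return sorted(VOCABULARY[w] for w in self.known_words)


elephant = Elephant()
elephant.on_drop("la clé", dropped_on_elephant=True)
elephant.on_drop("la carte", dropped_on_elephant=False)  # missed the elephant
print(elephant.usable_objects())  # ['key']
```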

Special credit: Gijs Verhoeven (I-Tech master student)

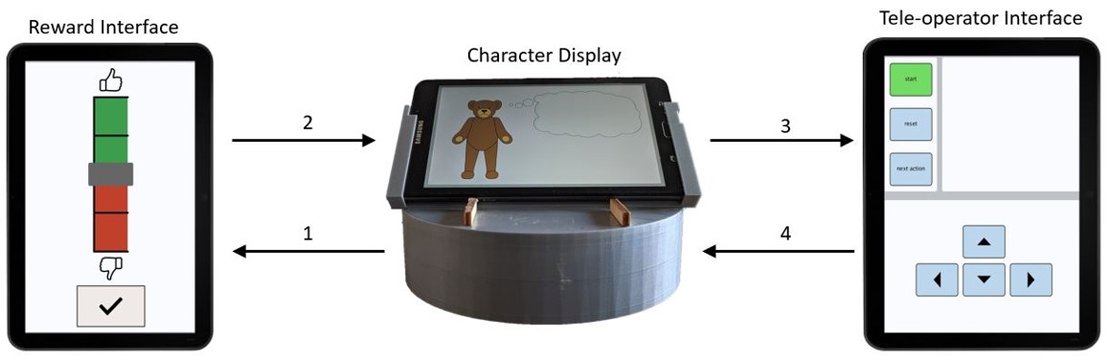

Encouraging collaboration between children

To encourage children to collaborate while teaching the surfacebot, we created a story that portrays the surfacebot as a bear called Ted (shown on the surfacebot’s tablet screen) who wants to get dressed to go outside but needs the children’s help to find the right clothes, as he does not know which clothes fit the weather. The clothing items are spread across locations on the tabletop, representing different rooms in the bear’s house. The surfacebot moves around these locations (secretly steered by a tele-operator) and selects clothes to wear. Children can give feedback on the surfacebot’s actions using the reward interface, a slider on a tablet. The surfacebot learns from this feedback through reinforcement learning and adjusts its decision making accordingly.
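
The text does not specify which reinforcement learning algorithm was used. As one simple possibility, the sketch below treats each weather condition as a context and keeps a value estimate per (weather, clothing item) pair, moved toward the slider reward with a fixed learning rate and explored with an epsilon-greedy choice (a contextual bandit, an elementary form of reinforcement learning). The class name, the slider range of [-1, 1], and all parameters are assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


class ClothingLearner:
    """Hypothetical tabular learner: one value estimate per (weather, item) pair."""

    def __init__(self, items: List[str], learning_rate: float = 0.5,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.items = items
        self.learning_rate = learning_rate
        self.epsilon = epsilon                      # chance of trying a random item
        self.values: Dict[Tuple[str, str], float] = defaultdict(float)
        self.rng = random.Random(seed)

    def choose(self, weather: str) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)      # explore
        # Exploit: pick the item with the highest learned value for this weather.
        return max(self.items, key=lambda item: self.values[(weather, item)])

    def feedback(self, weather: str, item: str, slider: float) -> None:
        """Use the slider position in [-1, 1] as the reward for this choice."""
        key = (weather, item)
        self.values[key] += self.learning_rate * (slider - self.values[key])


learner = ClothingLearner(["raincoat", "sunglasses", "scarf"], epsilon=0.0)
learner.feedback("rainy", "sunglasses", -1.0)   # children slide toward "wrong"
learner.feedback("rainy", "raincoat", 1.0)      # children slide toward "right"
print(learner.choose("rainy"))                  # → raincoat
```

Setting `epsilon` to 0 makes the example deterministic; during play, a small positive value would let Ted occasionally try other clothes, giving the children more choices to comment on.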

Special credit: Wouter Kaag (I-Tech master student)

Software releases

Some of the software code is available on GitLab.

Core coBOTnity Apps:

https://gitlab.com/cobotnity/cobotnityapps

Surfacebot Smart Navigation middleware and apps:

https://gitlab.com/cobotnity/SurfBotSmartNav

Further helper software: