A disordered network that is capable of detecting ordered patterns: it sounds contradictory, but it comes close to the way our brain works. Researchers at the University of Twente have developed a brain-inspired network like this. A big step forward is that it is based on silicon technology and can be operated at room temperature. It makes use of material properties that electronic designers usually try to avoid. Thanks to ‘hopping conduction’, the system evolves towards a solution without using predesigned elements. The researchers publish their work in the 15 January 2020 issue of Nature.

Our brain is very good at recognizing patterns. Artificial Intelligence can do better in some cases, but this comes at a price: it takes huge computing power, whereas the brain consumes only about 20 watts. The semiconductor industry is already embracing new computer design strategies that come closer to the way the brain works, such as Intel’s Loihi processor, which has neurons and synapses. Still, mimicking a single neuron takes thousands of transistors, while our brain has tens of billions of neurons. Miniaturization is one answer to these large numbers, but it, too, is reaching physical limits. The ‘disordered dopant atom network’ now presented in Nature is a completely different approach: it doesn’t use predesigned neurons or other circuitry, but makes use of material properties to evolve towards a solution. This highly counterintuitive approach proves to be energy efficient and takes up little chip area.

Hopping

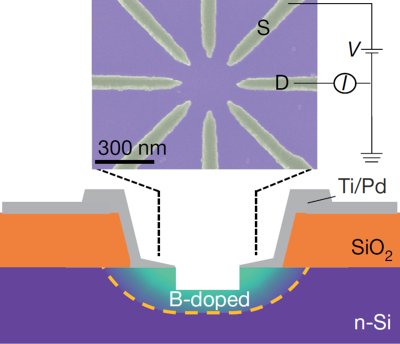

‘Doping’ is the key word here. In electronics, doping is the well-known way of influencing the properties of transistors by deliberately introducing impurities into the silicon crystal. The concentration must be high enough to achieve the desired effect. Using a much lower concentration instead, of boron in this case, results in a regime that circuit designers prefer to avoid. And that is exactly the regime in which the disordered network operates. Conduction now takes place via charge carriers hopping from one boron atom to another: ‘hopping conduction’ is, in a way, comparable to neurons seeking collaboration with other neurons to make a classification. As an example, the network is fed with 16 basic four-bit patterns, and each pattern results in a different output signal. With these 16 patterns as a basis, it is possible, for example, to recognize handwritten letters from a database with high accuracy and speed. The basic component currently measures 300 nanometers in diameter, contains about 100 boron atoms and consumes about 1 microwatt of power.

Top view (scanning electron microscopy) and side-view (drawing) of the boron-doped structure
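To make the idea of "16 basic patterns as a basis" more concrete, the sketch below shows one way a larger black-and-white image can be broken down into 2×2 blocks, each of which is one of exactly 16 possible four-bit patterns. This is an illustrative assumption, not the researchers' code: in the actual device, each block would be applied as inputs to the dopant network and its output signal read out, whereas here a simple bit-encoding lookup (the hypothetical `patch_index` function) stands in for the hardware. The function names `feature_map` and `pattern_histogram` are likewise hypothetical.

```python
from typing import List

Image = List[List[int]]  # binary image: rows of 0/1 pixels


def patch_index(tl: int, tr: int, bl: int, br: int) -> int:
    """Hypothetical stand-in for the hardware: encode one 2x2 binary
    patch (top-left, top-right, bottom-left, bottom-right) as one of
    16 classes, 0..15."""
    return (tl << 3) | (tr << 2) | (bl << 1) | br


def feature_map(image: Image) -> List[int]:
    """Split the image into non-overlapping 2x2 patches (row by row)
    and return the pattern class of each patch."""
    h, w = len(image), len(image[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    indices = []
    for r in range(0, h, 2):
        for c in range(0, w, 2):
            indices.append(patch_index(image[r][c], image[r][c + 1],
                                       image[r + 1][c], image[r + 1][c + 1]))
    return indices


def pattern_histogram(indices: List[int]) -> List[int]:
    """Count how often each of the 16 basic patterns occurs; a simple
    feature vector that a downstream classifier could use."""
    counts = [0] * 16
    for i in indices:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    img = [[1, 1, 0, 0],
           [1, 1, 0, 0],
           [0, 0, 1, 0],
           [0, 0, 0, 1]]
    print(feature_map(img))  # → [15, 0, 0, 9]
```

The resulting per-patch classes (or their histogram) would then be passed to a conventional classifier for the final recognition step; how that final stage is built is outside the scope of this sketch.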

Less data transport

In future systems that use this type of network, pattern recognition can be done locally, without relying on distant computing power. In autonomous driving, for example, many decisions have to be based on recognition. This involves either a big onboard computer system or high-bandwidth communication with the cloud, probably even both. The new brain-inspired approach would require less data transport, which is why the car manufacturing industry is already interested in the new UT approach. This type of computing, called ‘edge computing’, can also be used for face detection, for example.

The research was done at the Center for Brain-Inspired Nano Systems (BRAINS) of the University of Twente, a multidisciplinary center that opened in 2019. The NanoElectronics group (MESA+ Institute) and the Programmable Nanosystems group (Digital Society Institute) participated in this project, together with the Center for Computational Energy Research of Eindhoven University of Technology. It was made possible through financial support from the Dutch Research Council (NWO).

The paper ‘Classification with a disordered dopant atom network in silicon’, by Tao Chen, Jeroen van Gelder, Bram van de Ven, Sergey Amitonov, Bram de Wilde, Hans-Christian Ruiz Euler, Hajo Broersma, Peter Bobbert, Floris Zwanenburg and Wilfred van der Wiel, is published in Nature of 15 January 2020. The same issue of Nature also contains a review, ‘Evolution of circuits for machine learning’.
