Embedded Machine-Learning graduation projects with NXP AI-Team Eindhoven

At the Central Technology Organization (CTO) of NXP Semiconductors Eindhoven, we are currently looking for motivated students to support us in a variety of ML-related projects. Machine Learning and especially Deep Learning will not only enable, enhance, and nourish innovative customer applications, but also help us improve both NXP software and NXP hardware driving exciting future applications. The projects are executed within a driven team of about seven AI experts (2 MSc./ 1 PDEng./ 4 PhD.) with connections to different parts of the large NXP organization. We typically host 5 students any given moment in time, so you will be part of an enthusiastic, collaborative group with plenty of thinking power.

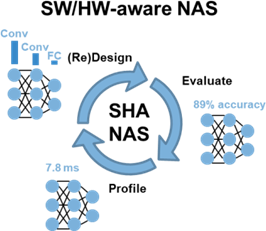

Neural networks are increasingly successful for a wide variety of tasks. Nevertheless, the design of these neural networks requires expert skills on several levels. On one side, a task-specific neural architecture achieving considerable accuracy needs to be designed. On the other side, real-time requirements and energy consumption budgets of the network deployment need to be met especially in the embedded domain. This often requires substantial manual effort, network redesign, retraining, etc. A popular strategy to resolve this is multi-objective Neural Architecture Search (NAS), where a network architecture may automatically be designed for both efficiency and accuracy.

We are looking for students to support us as part of their internship or graduation project on the following topics (see next page), touching different domains and technology focus areas. The concrete assignments will be formalized in discussion with the student and university supervisor, to match the interest and capabilities of the candidate best. For assignments, the NXP AI ecosystem and compute resources will be provided.

If you are interested and want to learn more, please reach out in a short email with your current CV and list of grades to one of:

- Willem Sanberg: willem.sanberg@nxp.com

- Hiram Rayo Torres Rodriguez: hiram.rayotorresrodriguez@nxp.com

- Nick van de Waterlaat: nick.vande.waterlaat@nxp.com

Scalable Neural Architecture Search

NAS is an attractive methodology to design and optimize good and efficient Neural Networks, but expensive for large scale models and or on high-bandwidth datasets. To enable NAS for a wide variety of domains requires exploring, improving and inventing e.g.:

- Zero-cost proxies to improve guidance in search space exploration

- Automatic efficient search space design

- Surrogate models to speed up candidate model evaluation (both how to collect data, how to design and how to exploit; covering multiple objectives)

- Low-fidelity estimators and prioritization techniques (training effort schedulers such as ASHA, weight sharing, learning curve extrapolation, …)

Design and automated optimization of DNNs for radar-based ADAS

- Improving state of the art approaches on object detection, classification, and segmentation in radar spectrum and/or 'point cloud' data with neural network architectures;

- Leveraging radar-domain specifics to improve reliability or efficiency of the DNN;

- Leveraging ML and NN-design know-how from other domains (e.g., transformer models for computer vision) for Radar signal processing;

- Optimizing simultaneously the deployment on target hardware and the accuracy on task/dataset via e.g. Neural Architecture Search (NAS).

Efficient Transformers

In the context of Efficient Transformers, we want to investigate Transformer optimization techniques to reduce the memory footprint and latency while sustaining ML task performance. Transformers have been shown to effective in many different domains, such as computer vision, audio, natural language processing and time series modelling. Yet, it is of great importance to optimize Transformer models for efficient inference on Edge AI devices, as Transformers have typically large number of parameters and high compute cost. There are a number of different approaches for optimizing Transformers, such as Quantization, Structured Pruning, Token Sparsification and Neural Architecture Search (NAS). We are particularly interested in researching and exploring Token Sparsification and NAS methods: How does these techniques compare to these other techniques ?. What benefits, challenges and limitations do they bring ? and how can we perhaps utilize this technique in conjunction with other methods to derive maximally optimized models with little/no accuracy degradation.

Automatic joint design and optimization of neural networks

- Neural Networks can be made more efficient and more accurate through a wide variety of techniques (Neural Architecture Search, Quantization, Pruning,…), but it is an open question on how and when to leverage these techniques in combination.

- Hence, this project will investigate the integration of orthogonal optimization techniques. We select a subset of techniques to enable and explore based on interest, applicability and feasibility, the scope of which can be extended gradually where possible and relevant, e.g.:

- How to exploit data-free optimizations (e.g., data-free quantization, distillation, etc.) and in which stage of the full pipeline?

- For early exit networks: how to identify optimal exit points through NAS?

Domain generalization over radar sensors, configurations and datasets

- Explore the extent of domain gaps in Radar DNNs over different sensors and configurations;

- Investigate & improve sota sensor domain generalization techniques for radar-based ADAS;

- Leverage data-efficient sensor domain gap mitigation, e.g. via active learning.

On-device learning/federated learning on limited resource devices

- Addressing the following questions from NXP perspective:

- What is the latest state of the art for embedded on-device learning?

- How does on-device training differ from a regular (desktop/cloud) backprop-setup?

- Are approaches using one/few-shot training efficient and competitive?

- What deployment toolchains exist for efficient embedded on-device learning?

- What are the requirements from a HW-perspective?

- What are opportunities for improving on-device learning through federated learning?