Head Gesture Recognition with Earables

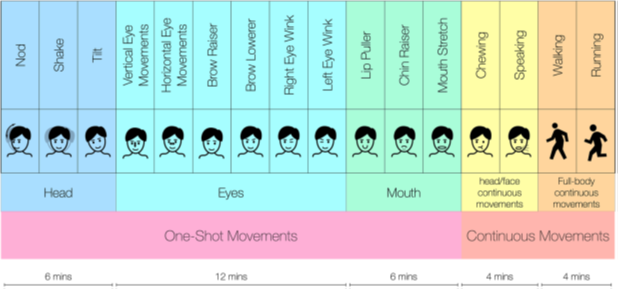

Image credit: Montanari, A., Ferlini, A., Balaji, A. N., Mascolo, C., & Kawsar, F. (2023). EarSet: A Multi-Modal Dataset for Studying the Impact of Head and Facial Movements on In-Ear PPG Signals. Scientific Data, 10(1), 850.

Introduction

This project investigates the feasibility of using earables to recognize user gestures from a combination of motion data and physiological signals. It builds on the EarSet dataset, which contains signals from in-ear photoplethysmography (PPG) sensors and co-located inertial measurement units (IMUs), recorded while participants performed a range of head and facial movements.

Objectives

The aim is to explore the EarSet dataset for detecting head-related activities and gestures. You are expected to extract features from the PPG and IMU signals, then select and train machine learning models that classify user gestures from these features. The ultimate objective is to evaluate the accuracy and robustness of the resulting model on unseen data.
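As an illustration only, the pipeline described above (windowed feature extraction, model training, evaluation on unseen data) might be sketched as follows. Everything here is an assumption, not part of the project specification or of EarSet's actual file format. The helpers `window_features`, `fit_centroids`, `predict` and `leave_one_subject_out` are hypothetical. The window length and step, the three statistical features, and the nearest-centroid classifier are placeholders. The input is a synthetic sine-plus-noise signal, not EarSet data. Leave-one-subject-out evaluation is shown as one common way to test on "unseen data": every participant is held out in turn, so the model is never scored on a person it was trained on.

```python
import math
import random
import statistics


def window_features(signal, win, step):
    """Split a 1-D signal into overlapping windows; return [mean, std, range] per window."""
    feats = []
    for start in range(0, len(signal) - win + 1, step):
        w = signal[start:start + win]
        feats.append([statistics.fmean(w), statistics.pstdev(w), max(w) - min(w)])
    return feats


def fit_centroids(X, y):
    """Nearest-centroid model (hypothetical stand-in for a real classifier): mean vector per class."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda c: math.dist(x, centroids[c]))


def leave_one_subject_out(data):
    """data maps subject -> list of (features, label); returns accuracy per held-out subject."""
    scores = {}
    for held_out in data:
        train = [(x, y) for s, rows in data.items() if s != held_out for x, y in rows]
        model = fit_centroids([x for x, _ in train], [y for _, y in train])
        test = data[held_out]
        correct = sum(predict(model, x) == y for x, y in test)
        scores[held_out] = correct / len(test)
    return scores


def synthetic_recording(label, n, rng):
    """Synthetic stand-in for one gyroscope axis (assumption; NOT EarSet data)."""
    amp = 1.0 if label == "nod" else 0.05  # nodding = large periodic motion
    return [amp * math.sin(2 * math.pi * t / 25) + rng.gauss(0, 0.02) for t in range(n)]


rng = random.Random(0)
data = {}
for subject in ["s1", "s2", "s3"]:
    rows = []
    for label in ["nod", "still"]:
        sig = synthetic_recording(label, 200, rng)
        rows += [(f, label) for f in window_features(sig, win=50, step=25)]
    data[subject] = rows

scores = leave_one_subject_out(data)
print(scores)
```

On real recordings, features would normally be standardized before a distance-based classifier is used, and both PPG and IMU channels would contribute features. The toy classes here are trivially separable, so the per-subject accuracies say nothing about real performance.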

Work

40% Theory, 40% Programming, 20% Writing

Contact

Özlem Durmaz İncel, Associate Professor, Pervasive Systems (ozlem.durmaz@utwente.nl)