The AI Act covers all types of AI across a broad range of sectors, with exceptions for AI systems used solely for military, national security, research and non-professional purposes. As a piece of product regulation, it does not confer rights on individuals; instead, it regulates the providers of AI systems and the entities that use AI in a diverse set of contexts.

Notably, research activities fall outside the scope of the AI Act, as it is not meant to be an instrument for regulating research as such. However, its content and purpose can still serve as inspiration for research activities around AI.

The new rules:

- address risks specifically created by AI applications

- prohibit AI practices that pose unacceptable risks

- determine a list of high-risk applications

- set clear requirements for AI systems used in high-risk applications

- define specific obligations for deployers and providers of high-risk AI applications

- require a conformity assessment before a given AI system is put into service or placed on the market

- put enforcement in place after a given AI system is placed on the market

- establish a governance structure at European and national level

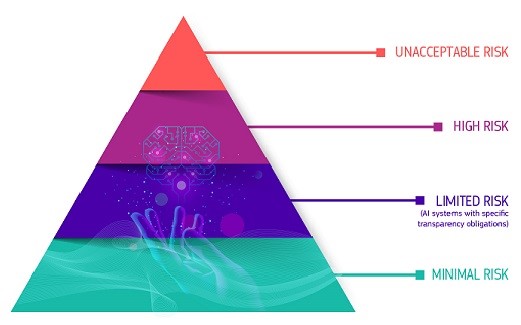

The AI Act adopts a risk-based approach, as shown in the picture below (source: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai)